A common misconception about AI literacy is that it’s about skills – teaching students to use AI to run faster on existing academic trajectories, to achieve professional goals, or to perform at higher levels. While it certainly is important to know how to use AI in academic settings to accomplish specific tasks and assignments, this is merely scratching the surface of AI literacy.

Limiting your school’s definition of AI literacy to this narrow scope misses the critical idea behind high-quality AI literacy programmes and curricula: to teach students how to identify and cultivate their own voice (and by extension, their own identity) in a world that is increasingly saturated with the sameness of AI-generated content.

If you haven’t already been doing so, now is the time to start keeping your finger on the pulse of your students’ voices and expression. Why? Because credible evidence is emerging that the words most commonly used by AI chatbots have started to infiltrate human language – a new slippery slope for any educational institution interested in nurturing holistic human beings.

A recent study found that AI-assisted writing (i.e. LLM-assisted writing) has penetrated multiple sectors of society at significant levels, beyond the K–12 sector. Approximately 18% of financial consumer complaints, up to 24% of corporate press releases, nearly 14% of United Nations press releases, and up to 15% of job postings showed significant use of AI assistance, mirroring similar widespread adoption that previous studies had found in academic research globally.

Other studies on LLMs and writing have found that, because of biased training data, LLMs tend to choose language that frames certain genders or groups of people in a skewed manner. This includes a predisposition to generate stories about gender-normative topics (family for women, politics for men) when prompted to write a story about a male or female character, and a tendency to associate certain words and attributes (like “friendly” or “weak”) with certain descriptions of characters’ appearances or demographic groups.

WHAT DOES IT MEAN?

First, there is the slow creep towards homogenisation: the risk that AI-assisted writing will, slowly but surely, make our writing more similar, our individual voices less distinctive, and our language perhaps even more biased. As LLM-inspired language continues to proliferate around our young writers, it is important to encourage them to choose their own words for what they are describing or depicting, with intention and thought, so that they do not default to perspectives inherited from training data scraped from every corner of the internet.

Second, there is the inevitable undertow of homogenisation: a potential loss of meaning and purpose. As the range of written expression narrows, so can the emotional complexity and experience of the individuals writing the words. When we lose words, we lose nuance. And once we lose our voice, our individuality and sense of purpose begin to erode, slowly but surely.

If you are a school leader, it is time to consider which kind of AI literacy programme your institution is investing in: one that teaches students to use AI as a shortcut and encourages mimicry to fulfil mid-term academic goals, or one that guides students to interrogate and affirm their own sense of identity as learners, using AI-augmented learning as a jumping-off point for discussions of lifelong learning and purpose. To explore further how AI-generated text reflects biases in the literary canon, see ‘Written in the Style of’: ChatGPT and the Literary Canon.

This piece is a contributed article shared by the author as part of our expert content series. Mila is the Founder of Kigumi Group and an AI ethics assessor certified by the Institute of Electrical and Electronics Engineers (IEEE).

AI-Related Education Technology Products / Services That May Be Of Interest:

Get a special discount by quoting code AISLMALL during CHECKOUT.

AI Tool for Content Research and Curation – Storm AI

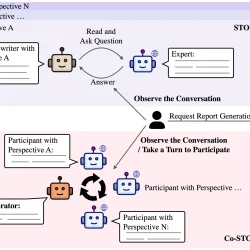

STORM is an artificial intelligence tool developed by researchers at Stanford University that can transform online information on a given topic into a coherent, well-structured text. STORM is the equivalent of having a team of researchers who curate content and present it in an organized and easy-to-understand format. It even provides references for every piece of data so that you know exactly where the information comes from. On its website, the creators describe it as an alternative to Wikipedia.

E-Learning Platform – KiguLab

KiguLab is the latest e-learning platform for K-12 students to learn digital wellbeing using self-paced 5-7 minute microlessons covering cybersafety, digital wellbeing and mental health, critical thinking online, AI ethics and more. Available in Chinese, English, Thai and other languages (with subtitles). Pair KiguLab with our multilingual AI Digital Parenting Coach to teach parents the same topics at home.

Redefining Personalised Learning – Nova AI Mentor

Novalearn Limited is an EdTech company transforming education by seamlessly integrating machine learning, studio-produced edutainment, and quality STEAM education aligned with international K-12 curricula. We empower students and support educators by equipping them with the skills and tools they need for success. From children and parents at home to school leaders and teachers, we strive to ensure effective, engaging, and accessible learning and teaching for all.